December 21, 2025

Building Cross-Functional AI Literacy: Training Creatives, Marketers, and IT

Ross Guthrie

Applied AI Strategist

AI summary

A guide to training creatives, marketers, and IT in AI for successful adoption and actual value creation.

When AI begins embedding across functions, the challenge is cultural as well as technical. That means calling upon teams to use AI in their daily workflows comes with the responsibility to build AI literacy.

AI literacy helps every team — creative, content, IT, and operations — understand how AI shifts decision making, verification, and value creation. It also unlocks value in the gaps between teams where work typically stalls due to fragmented context and misaligned timing.

AI is also shifting roles from doers to coaches, guides, and mentors for teams of agents. These agents, in turn, open up more creative ways to solve problems, reach customers, and get great work into the market.

You don’t need everyone to become prompt engineers (though this is helpful), but you do need to build a shared understanding of:

What AI can (and can’t) do, and what it will and won’t get better at

Where human judgment and insight are necessary

How to collaborate with AI tools safely and effectively

How to recognize and respond to drift, bias, or error

By developing AI literacy as part of change management, organizations build teams that feel informed, included, and capable, rather than disrupted or displaced.

Key benefits of AI literacy in transformation

Confident, consistent use of AI across roles

Faster data-to-decision cycles and higher data value realization

Smarter collaboration between teams

Realistic expectations about AI’s strengths and limits

Safer, more responsible AI adoption at scale

Stronger sense of ownership and trust during transformation

How to build AI literacy in marketing and creative teams

AI fluency comes from giving individuals the freedom to experiment and giving teams room for the often messy, cross-functional collaboration that drives workflow definition and use case implementation. This only happens when people have the space to experiment, collaborate, and work toward business outcomes they know they can affect.

This often means refocusing teams on AI at the cost of business as usual. Concretely, this means moving savvy individuals off of routine work that maintains value so they can focus on building new sources of value.

Here are the core components of building that innovation into your organization:

1. Tool-specific training

Teams ask, "Where will AI tools show up in our everyday tasks?" Because the answer is often "across multiple workflows and touchpoints," they need to understand which tool serves what purpose, how its outputs should be evaluated, and what its limitations are.

For example, if a research tool generates summaries but occasionally pulls from outdated or unreliable sources, teams must verify results before using them and develop strategies to mitigate the problem systematically. This goes beyond checking outputs: it means understanding the tool's architecture well enough to know where it's likely to fail, and building guardrails accordingly.
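As one illustration of such a guardrail, here is a minimal Python sketch that flags cited sources that are too old or come from a domain nobody has approved. The allowlist, the one-year age limit, and the `flag_source` helper are all hypothetical; a real research tool may expose source metadata differently, or not at all.

```python
from datetime import date
from urllib.parse import urlparse

# Hypothetical values: replace with your own approved domains and freshness rule.
APPROVED_DOMAINS = {"example.com", "example.org"}
MAX_AGE_DAYS = 365


def flag_source(url: str, published: date, today: date) -> list[str]:
    """Return the reasons a cited source needs human review (empty if none)."""
    issues = []
    host = urlparse(url).hostname or ""
    # Treat "www.example.com" and "example.com" as the same domain.
    if host.removeprefix("www.") not in APPROVED_DOMAINS:
        issues.append("unapproved domain")
    if (today - published).days > MAX_AGE_DAYS:
        issues.append("outdated")
    return issues


if __name__ == "__main__":
    today = date(2025, 12, 21)
    print(flag_source("https://www.example.com/report", date(2025, 6, 1), today))
    print(flag_source("https://blog.unknown.net/post", date(2023, 1, 1), today))
```

A check like this does not replace human verification. It only narrows down which summaries a reviewer should look at first.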

An uncomfortable truth: no AI solution is going to work perfectly out of the box. Whether you're building custom tools or using off-the-shelf platforms, there's calibration, output optimization, and ongoing learning required. The underlying technology is evolving rapidly, and what you consider one discrete workflow may actually require three, four, possibly five different models working in concert to execute properly.

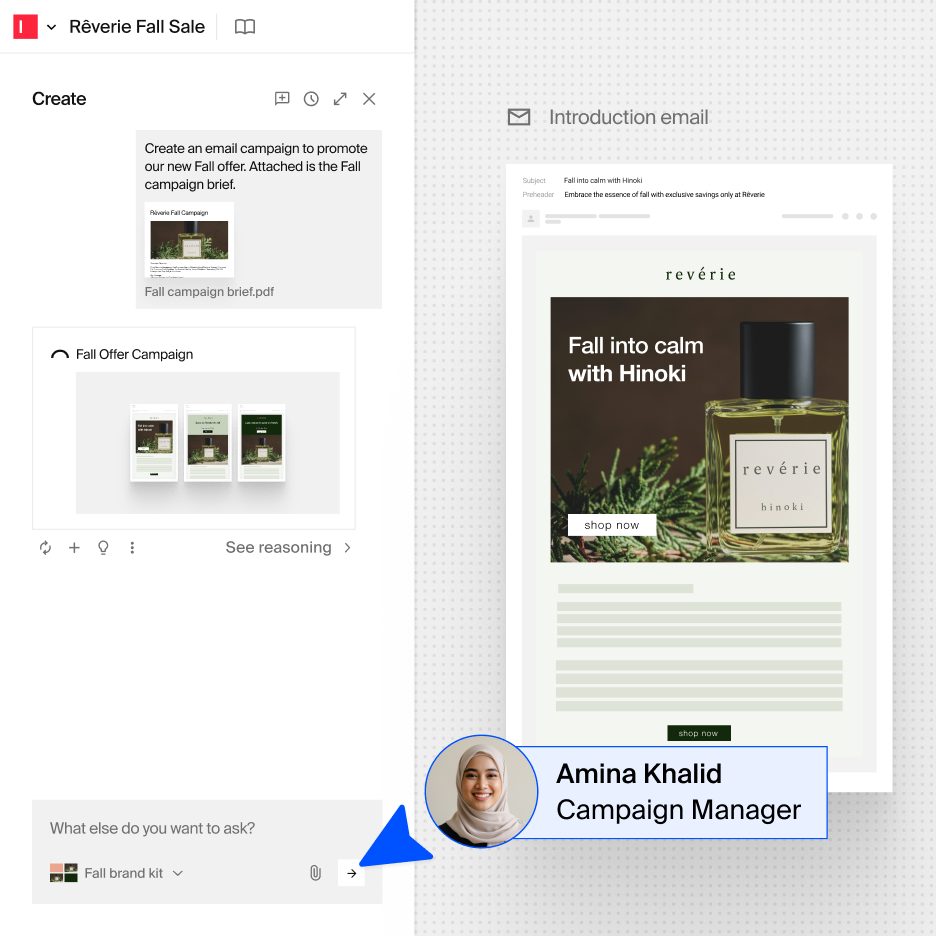

This understanding becomes critical when teams work with agentic systems like Typeface. When you use Typeface, you're directing AI agents to generate first drafts, blogs, email variations, social posts, ads, and web pages.

For instance, if you ask Email Agent to "Create an email campaign promoting our Fall offer" and attach your campaign brief and brand kit, it will generate multiple variations. But to use this effectively, teams need to understand how the agent interprets instructions, what data sources drive generation, which elements of the brand kit influence specific outputs, and what levers they have to adjust results when the first draft misses the mark.

Related reading:

Email Agent: Turning Disconnected Workflows into Powerful Email Campaigns

2. Knowledge of prompts

Though you can use everyday language to instruct AI, how it understands you is different from how a person would.

Team members draw on shared context and common sense to understand intent and relate to tasks.

AI breaks your prompt into smaller units called tokens (words or pieces of words) and predicts a response from patterns it learned in training. Because that knowledge is largely fixed at training time and pattern-based, it may miss the subtle intentions that people grasp with ease.

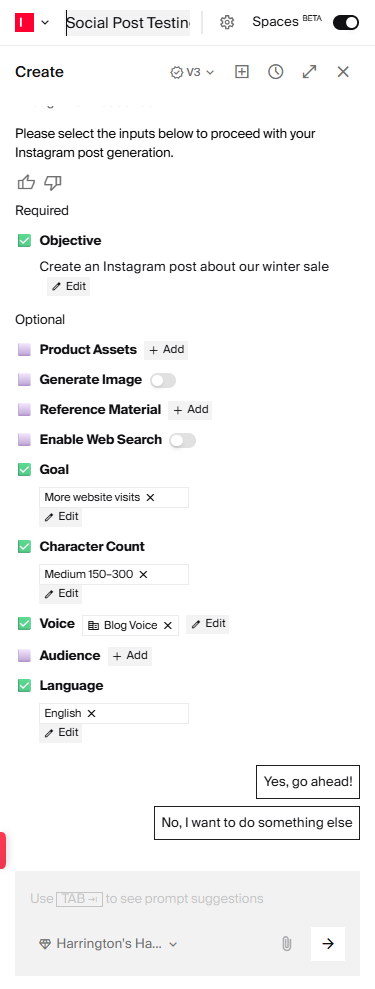

In other words, how you prompt AI determines how useful its outputs are. For summaries and blogs, which require explicit instructions, prompting knowledge is particularly helpful. For more open-ended tasks, like generating images, AI can interpret broad ideas, but prompting knowledge still helps you guide style, detail, and accuracy.

With Typeface, you don’t need prompting expertise. The AI enhances basic image prompts to generate rich, detailed visuals. It also adds structure to blog prompts by asking for missing pieces like word count, tone, audience, reference material, and specific constraints, and it gives you a framework for building the instructions, guardrails, and output architecture that make your outputs increasingly reliable. This improves output consistency (every blog is created with the same schema) and helps ground content in factual sources.

How to use AI prompts effectively

Bad prompt: “Create a blog post about [product]”

Good prompt: “You’re an experienced B2B product marketer. Your role is to ingest a structured article brief and produce a complete, high-quality explainer article.

The article must meet both editorial and technical SEO standards, cite only approved references, and align with the defined tone, structure, and audience.”

[Provide brief with details about the blog post topic, structure, and sources]
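To make the difference concrete, the following Python sketch assembles the "good prompt" from a structured brief and refuses to build it while fields are missing. The `Brief` fields and helper names are assumptions chosen for illustration; they are not Typeface's schema or API.

```python
from dataclasses import dataclass, field

ROLE = "You're an experienced B2B product marketer."
TASK = (
    "Ingest the structured article brief below and produce a complete, "
    "high-quality explainer article. Cite only approved references."
)


@dataclass
class Brief:
    """Hypothetical brief structure; adapt the fields to your own template."""
    topic: str = ""
    audience: str = ""
    tone: str = ""
    word_count: int = 0
    sources: list[str] = field(default_factory=list)


def missing_fields(brief: Brief) -> list[str]:
    """Return the names of brief fields that are still empty."""
    return [name for name, value in vars(brief).items() if not value]


def build_prompt(brief: Brief) -> str:
    """Assemble the prompt only once every brief field is filled in."""
    gaps = missing_fields(brief)
    if gaps:
        raise ValueError(f"Brief is incomplete, missing: {', '.join(gaps)}")
    sources = "\n".join(f"- {s}" for s in brief.sources)
    return (
        f"{ROLE}\n{TASK}\n\n"
        f"Topic: {brief.topic}\n"
        f"Audience: {brief.audience}\n"
        f"Tone: {brief.tone}\n"
        f"Word count: {brief.word_count}\n"
        f"Approved references:\n{sources}"
    )
```

Calling `missing_fields` before generation mirrors the idea of asking for missing pieces up front, so every prompt reaches the model with the same structure.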

Concepts to be aware of:

Prompt bias: Model outputs will shift based on how things are phrased.

Positional bias (grounding content): Models tend to overemphasize concepts earlier in the grounding content so topics later in the document can appear “washed out” in the output.

Mitigation techniques

| Technique | How to apply it | Example or notes |
| --- | --- | --- |
| Instruction anchoring | Repeat key directives at start and end | “Remember: summarize only factual claims.” |
| Chunked retrieval | Feed smaller, thematically coherent sections | Use RAG memory rather than one long prompt. Example: Store brand guidelines in Typeface and apply them persistently rather than making them prompt-dependent, i.e., saying “Use a confident tone” every time. |
| Weighted prompting | Reintroduce summaries before the final task | “Based on what you read, here are the 3 key points. Now answer.” |
| Schema alignment | Use structured templates | Keeps token distribution predictable across positions. Example: Typeface's AI agents support schema alignment by prompting for missing details like audience, word limit, grounding content, etc. |
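Two of these techniques, instruction anchoring and feeding smaller sections, can be sketched in a few lines of Python. This is an illustration under assumptions: simple paragraph grouping stands in for a real retrieval (RAG) store, and the function names and 1,500-character limit are made up for the example.

```python
def anchor(directive: str, body: str) -> str:
    """Instruction anchoring: state the key directive before and after the body."""
    return f"{directive}\n\n{body}\n\nRemember: {directive}"


def chunk_by_paragraph(document: str, max_chars: int = 1500) -> list[str]:
    """Group blank-line-separated paragraphs into chunks of about max_chars.

    A single paragraph longer than max_chars is kept whole as its own chunk.
    """
    chunks: list[str] = []
    current = ""
    for para in (p.strip() for p in document.split("\n\n")):
        if not para:
            continue
        candidate = f"{current}\n\n{para}" if current else para
        if current and len(candidate) > max_chars:
            chunks.append(current)
            current = para
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


def build_prompts(document: str, directive: str, max_chars: int = 1500) -> list[str]:
    """One anchored prompt per chunk, so later sections are not washed out."""
    return [anchor(directive, c) for c in chunk_by_paragraph(document, max_chars)]
```

Each chunk gets the directive at both ends, which addresses positional bias from two directions: shorter grounding content, and the instruction repeated where the model reads it last.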

Related reading:

25+ AI Blog Prompts to Write Blog Posts Faster

3. Create feedback loops

Feedback loops create a cycle of improvement: deploy, get results, learn, adjust, and deploy a new approach. They make users feel heard and invested, and help them use AI marketing platforms confidently.

→ Collect user feedback through periodic surveys or forums where your marketing team shares what they like and dislike about AI platforms.

→ Collect performance feedback by monitoring key indicators and using analytics to zero in on performance and update AI guidelines.

→ Measure and adjust your AI platform regularly. Set up a monthly or quarterly cadence to review outcomes from AI-augmented campaigns versus historical benchmarks. If you see improvement, set stretch targets. If you see underperformance, adjust your approach.
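The review step can be as simple as comparing each metric to its historical benchmark and deciding on a next action. The sketch below assumes higher values are better (for example, click-through rate) and uses a hypothetical 5% tolerance band; for cost-type metrics the comparison would need to be flipped.

```python
def review_metric(name: str, benchmark: float, current: float,
                  tolerance: float = 0.05) -> dict:
    """Compare an AI-augmented result to its historical benchmark.

    Assumes a higher-is-better metric. Changes within +/- tolerance are
    treated as noise rather than a real improvement or decline.
    """
    if benchmark <= 0:
        raise ValueError("benchmark must be positive")
    change = (current - benchmark) / benchmark
    if change > tolerance:
        action = "set stretch target"
    elif change < -tolerance:
        action = "adjust approach"
    else:
        action = "hold steady"
    return {"metric": name, "change_pct": round(change * 100, 1), "action": action}


if __name__ == "__main__":
    # Illustrative numbers only, not real campaign data.
    for row in [("click-through rate", 2.0, 2.5), ("open rate", 20.0, 20.5)]:
        print(review_metric(*row))
```

Running this on a monthly or quarterly cadence gives the team a consistent, shared definition of "improvement" and "underperformance."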

Related reading:

Enterprise AI Adoption for Marketing: Pilot to Production

4. Evolve team structure and skills

The goal of AI literacy is both to help users get comfortable with the technology and to deepen AI proficiency, from writing effective prompts to interpreting AI-driven analytics. To enforce a structured approach to developing and governing AI use, many organizations create an AI Center of Excellence (CoE) to bring governance, training, model management, and vendor oversight under one umbrella.

A CoE builds the capacity to deliver AI training programs such as workshops, online courses, and expert-led sessions for content creators and campaign managers. It also makes sure knowledge is shared effectively, consensus is built, and organizational silos are broken down to promote collaboration across departments.

How to build shared AI literacy between marketing and IT

When marketing and IT understand each other’s worlds, improving AI outputs and evolving the tools becomes far smoother. Shared AI literacy, built through feedback and training, bridges communication gaps and mobilizes cross-functional teams to integrate and use AI strategically.

What IT must understand about marketing

Say marketing submits feedback that content is off-brand. IT needs to understand what that means in context or know when to ask for clarification. If it’s not clear that “off-brand” refers to a tone that’s too casual or the use of disallowed phrases, IT can’t properly tune the guardrails.

Here are the areas where IT needs that shared understanding:

Marketing goals and KPIs: What success looks like and where AI can move those metrics, like faster creative testing or personalization across cohorts.

Brand, tone, and compliance constraints: What “on-brand” and “off-brand” mean, special considerations for highly regulated industries, approval workflows, and how teams manage marketing content risk.

Daily workflows: How content creation happens — from brief to creation to approval to launch — and the pain-points in both traditional and AI-assisted workflows.

Experimentation: Why marketers need rapid testing and content explainability to trust AI and adopt outputs.

What marketing must understand about IT

A marketer suggesting a “small improvement” to the AI platform in a Slack thread may not realize how much technical work or constraint is involved. AI literacy programs should give teams a practical understanding of how the platform works and the key AI and IT concepts needed to build mutual understanding and appreciation. Here are a few examples:

Key definitions: AI models vs. AI agents, training vs. fine-tuning, latency, rate limits, and data pipelines.

Data privacy boundaries: Data minimization and retention for AI-generated content, and ethical challenges in hyper-personalization, like algorithmic bias and data security risks.

Guardrails and risks: How hallucination controls, filters, policies, and human-in-the-loop workflows protect the brand.

Feedback loops and governance: How to log bad outputs (e.g., via a Linear ticket), how content scores are generated, how platform settings work, and how to evaluate AI content.
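One way to make feedback like "this content is off-brand" actionable is to log it in a structured form that names the specific problem. The Python sketch below is an assumption about what such a record could look like; the reason categories and field names are hypothetical, and it only produces JSON text that could be pasted into whatever ticketing tool the team uses.

```python
import json
from dataclasses import asdict, dataclass
from enum import Enum


class Reason(str, Enum):
    """Hypothetical categories that spell out what 'off-brand' means."""
    TONE_TOO_CASUAL = "tone_too_casual"
    DISALLOWED_PHRASE = "disallowed_phrase"
    FACTUAL_ERROR = "factual_error"
    OTHER = "other"


@dataclass
class OutputFeedback:
    asset_id: str   # which generated asset the feedback is about
    reason: Reason  # the specific problem, not just "off-brand"
    excerpt: str    # the exact text that triggered the report
    note: str = ""  # optional free-text context from the marketer

    def to_ticket_body(self) -> str:
        """Serialize the record as JSON for a ticket or log entry."""
        payload = asdict(self)
        payload["reason"] = self.reason.value
        return json.dumps(payload, indent=2)


if __name__ == "__main__":
    fb = OutputFeedback("email-001", Reason.DISALLOWED_PHRASE, "best deal ever")
    print(fb.to_ticket_body())
```

With a fixed set of reasons, IT can count how often each problem occurs and tune the matching guardrail, instead of interpreting free-form complaints one at a time.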

AI training strategies

Hands-on learning:

AI agent playgrounds or sandboxes for creatives and writers to experiment with AI freely

Peer-to-peer learning:

Pilot projects with a small team that uses AI tools regularly, is willing to help others learn, and can provide initial quality control along with user and performance feedback.

AI mentorship programs where experienced employees guide their peers in adopting AI platforms.

Self-directed learning:

Best practices webinars

A curated selection of YouTube videos on AI essentials (RAG, AI agents vs workflows, multimodal AI, etc.)

Prompt libraries

AI playbooks

Accelerate content velocity with Typeface

Marketing teams have readily taken to generative tools to get more done and make their lives easier. However, much of this usage happens one person and one tool at a time, leading to uneven quality, fragmented efforts, and missed opportunities to learn from what others have discovered.

To unify marketers, creatives, and IT around a single platform and unlock enterprise value, consider a full-featured agentic marketing platform. It should offer agents that orchestrate campaigns (not just create single assets), bring teams into a collaborative workspace, support at-scale brand and audience personalization, and assure enterprise-grade security.

Typeface fits the brief. Our enterprise AI marketing platform enables organizations to deliver on-brand, multi-channel campaigns through a brand intelligence system, specialized AI agents, and a collaborative workspace. Teams using Typeface can create content faster, dominate feeds, lead in thought leadership, and let their creativity soar.

We support product and AI education through an AI Academy, templates, guides, reports, blogs, and webinars, helping teams deepen their understanding of AI-powered marketing. Explore Typeface with a product tour, read our latest report, or see how Typeface stands out from competitors.
