August 29, 2025

Enterprise AI Adoption for Marketing: Pilot to Production

Katie Murphy

Customer Success Manager

AI summary

A successful enterprise AI adoption plan for marketing involves a phased rollout, starting with low-complexity pilot use cases, building cross-functional teams, and training AI on brand assets. By mapping workflows, setting clear success metrics, and maintaining human oversight, organizations can refine, scale, and govern AI implementation for long-term impact and efficiency.

Implementing AI in an organization is a delicate balancing act. To actually transform operations, a new technology should integrate naturally into everyday workflows. Rolling it out in phases within a limited environment is the best approach, as it minimizes risk, maximizes learning opportunities, and builds trust among users.

Let's look at how both marketing leadership and end users can successfully plan and guide AI implementation from pilot to production.

AI rollout strategy: Key points for leadership

Everything starts at the top. Without a clear vision or adequate planning, AI pilots can leave everyone confused, struggle to build consensus, and fail to reach production. These problems are avoidable if leaders establish a few key things from the beginning:

Use cases

AI has plenty of marketing use cases, but not all processes need AI. Teams need to define where AI will be used and add value before launching a pilot. Without this foundation, pilots lack a clear target and scaling becomes directionless.

Success metrics

For enterprise marketing teams, AI is an incredible production tool. But how do you turn its ability to create at scale into meaningful outcomes?

Will producing ten articles a week with AI, instead of the usual two or three written by hand, actually improve marketing results? Or does AI's value lie in creating personalized content for high-performing accounts in half the time?

Defining success metrics upfront drives pilot projects toward meaningful results rather than just impressive output volumes.

A cross-functional AI “tiger team”

A tiger team is a small, cross-functional group that pilots promising AI use cases. It's made up of functional experts who test the tool, share feedback, and guide implementation after a successful pilot.

A tiger team piloting an AI marketing platform typically consists of the marketing lead, members of the copy and creative teams, and a representative from IT. They train the tool on company data, define a prompting strategy, evaluate AI outputs, and suggest improvements.

AI implementation phases

AI fundamentally changes how work gets done. Marketers and copywriters increasingly act as reviewers, freeing up time for the more creative and strategic aspects of their work.

A phased approach helps the intended users of the tool ease into this shift rather than feeling overwhelmed by the change. They're able to test thoroughly, learn as they go, and scale with confidence.

The 30-60-90 day AI implementation roadmap for enterprise marketing teams is built around this idea. It divides a pilot rollout into three stages:

First 30 days: Explore, prioritize, and align

Next 30 days: Build, configure, and test

Final 30 days: Evaluate, refine, and plan to scale
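To put the roadmap on a calendar, a team might compute each phase window from a kickoff date. The sketch below is a minimal Python example, assuming the kickoff date counts as Day 1; the `phase_windows` function and the sample kickoff date are hypothetical, and the phase labels reuse this article's phase titles.

```python
from datetime import date, timedelta

# (phase label, first day, last day), following the 30-60-90 day roadmap.
PHASES = [
    ("Plan the pilot", 1, 30),
    ("Develop and test pilot", 31, 60),
    ("Refine and plan to scale", 61, 90),
]


def phase_windows(kickoff: date) -> list[tuple[str, date, date]]:
    """Return (label, start, end) calendar dates, treating kickoff as Day 1."""
    windows = []
    for label, first_day, last_day in PHASES:
        # Day N falls N - 1 days after kickoff.
        start = kickoff + timedelta(days=first_day - 1)
        end = kickoff + timedelta(days=last_day - 1)
        windows.append((label, start, end))
    return windows


if __name__ == "__main__":
    for label, start, end in phase_windows(date(2025, 9, 1)):
        print(f"{label}: {start:%b %d} to {end:%b %d}")
```

Counting in days rather than calendar months keeps each phase exactly 30 days long, regardless of how many days the months in between have.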

Phase 1 (Days 1-30): Plan the pilot

In the first month, you plan your pilot project and put it on solid ground. Key actions in this phase:

Identify high-impact, low-complexity use cases

“Shouldn’t I start with the things I most want AI to solve for?” is a valid question, but there’s strong logic behind starting with a low-complexity task that still addresses real bottlenecks.

At this stage, you’re proving AI works in your environment, and a low-stakes task suffices to make this point. Easy wins build team trust in the tool and allow safe learning without risking a major disruption. Ultimately, a successful low-risk trial builds momentum towards broader AI adoption.

Examples of high-impact, low-complexity use cases:

Email subject lines

Social captions

Ad copy

Blog feature images

Let's also consider the counter-logic: why spend time on tasks that aren't your biggest pain points? You could start by testing what you need most, but only after weighing the issues you might run into.

Say you're using AI for a higher-complexity task with many moving parts, like creative briefs, multiple review rounds, and tone nuances. Unless users are aligned on the prompting strategy, they may get outputs of varying quality from the same AI tool, making success hard to judge and delaying progress.

Build your tiger team

The success of any enterprise AI initiative hinges on the people driving it. Your tiger team will become your organization's first AI power users and tool experts, who guide peers and teams through training and optimization.

Select representatives from your marketing and IT operations to run the pilot project. This group should include your:

Head of marketing operations

Owns the project

Coordinates timelines and milestones

Tracks success metrics

Manages senior leadership communication and reporting

Marketing team members

Represent end-user perspectives and quality standards

Test across marketing use cases, document pain points, and iterate towards better outputs

Creative team

Tests, improves, and documents AI’s visual output

IT representative

Handles technical integration, security requirements, and platform connectivity

Map entire workflows, not just tasks

While use cases are starting points to quickly show AI’s value, mapping workflows sets the stage for scaling AI strategically. For example, if your use case is generating social captions, you should also map the entire social campaign workflow to identify areas AI can improve, such as:

Handoffs from the social team to legal

Speed, by auto-generating caption variants for different channels or formats

Consistency, by ensuring brand tone and messaging rules match across formats

With Typeface, these steps happen within a connected workflow, one that can mirror your current process or be designed from scratch (in fast, easy steps) to unlock greater efficiency. The same Brand Kit and campaign brief can power content creation across channels, helping your team scale content without hopping from tool to tool.

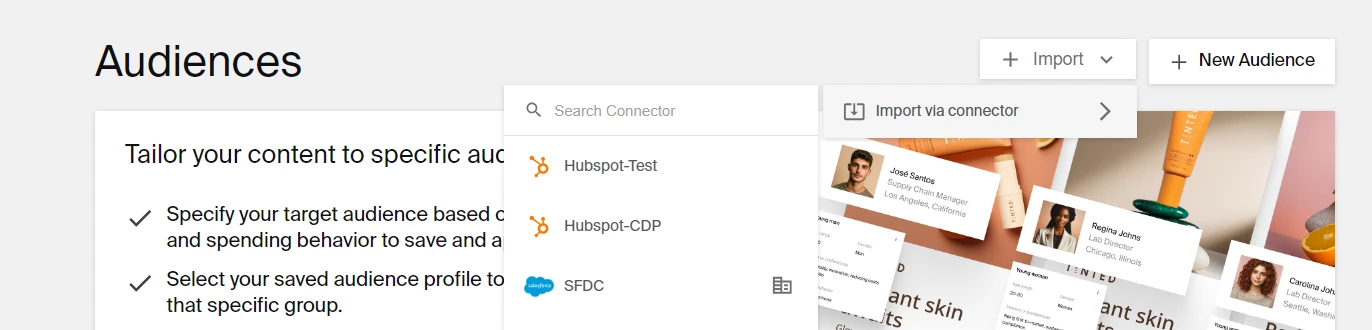

Assess AI readiness

Enterprises have many AI marketing solutions to choose from. But what sets an AI marketing platform apart is its ability to use your own data to create accurate, insightful, and on-brand content. This is only possible if the platform works across your tech stack through APIs and data connectors.

So, it's essential to check your technical and data readiness before you start generating content using AI agents.

What to check for:

Are APIs available for integration?

Can the AI agent access the audiences, assets, or brand guidelines it needs from your existing systems?

Do you have identity and access controls (e.g., role-based access) to manage how users interact with the AI platform?

Can the AI agent's outputs be audited or reviewed by humans before going live, especially in regulated environments?

Typeface is designed to help teams create contextual content at scale more easily. It streamlines workflows across email, paid media, social, and web channels through seamless integrations with your entire tech stack, including Salesforce Marketing Cloud, Google Ads Manager, HubSpot, Slack, and more. You can create unique content, shorten review cycles, and go live from a single hub.

Define success metrics upfront

The only way to be certain that AI is doing its job is to establish specific, quantifiable metrics, like:

Reduce time-to-publish by 40% while maintaining current engagement rates

Improve speed-to-market for reels and short-form video by 50%

Grow output by 30% using your existing team and workflows

Activate 3–5 new audience segments previously out of scope

Produce 5x more asset variations per campaign without increasing design workload

Generate custom product catalog images 4x faster for seasonal updates
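Checking a target like the first one comes down to simple arithmetic: compare the pilot period against a pre-pilot baseline. The Python sketch below assumes you can export average hours-to-publish and engagement rate for both periods. The `MetricSnapshot` fields, the sample numbers, and the zero-tolerance default for engagement are illustrative assumptions, not Typeface features.

```python
from dataclasses import dataclass


@dataclass
class MetricSnapshot:
    """Average figures for one period (hypothetical fields for illustration)."""
    hours_to_publish: float  # average hours from brief to live
    engagement_rate: float   # e.g. clicks / impressions, as a fraction


def percent_reduction(baseline: float, pilot: float) -> float:
    """Percentage drop from baseline to pilot; positive means the pilot was lower."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - pilot) / baseline * 100


def met_time_to_publish_goal(
    baseline: MetricSnapshot,
    pilot: MetricSnapshot,
    target_reduction_pct: float = 40.0,
    engagement_tolerance: float = 0.0,
) -> bool:
    """True if time-to-publish fell by at least the target percentage
    and engagement stayed at or above baseline minus the tolerance."""
    faster_enough = (
        percent_reduction(baseline.hours_to_publish, pilot.hours_to_publish)
        >= target_reduction_pct
    )
    engagement_held = (
        pilot.engagement_rate >= baseline.engagement_rate - engagement_tolerance
    )
    return faster_enough and engagement_held


if __name__ == "__main__":
    before = MetricSnapshot(hours_to_publish=20.0, engagement_rate=0.032)
    after = MetricSnapshot(hours_to_publish=11.0, engagement_rate=0.033)
    print(round(percent_reduction(before.hours_to_publish, after.hours_to_publish), 1))  # 45.0
    print(met_time_to_publish_goal(before, after))  # True
```

Pairing the speed target with an engagement guardrail in one check reflects the point made earlier: faster output only counts as success if results hold up.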

Communicate plan to broader team

AI is no longer optional; it’s becoming embedded in how work gets done. But employees deserve to understand why your organization is adopting it, and what it means for them.

Communicate openly and early. Ground the message in your strategic goals and the value AI brings to employees, and acknowledge fears around AI adoption. This helps build acceptance and alignment.

Further, even if the pilot is limited to marketing, the outcomes may affect other teams. Letting the broader team know what's happening, what's being measured, and why creates transparency and increases buy-in.

Enterprise AI implementation: Results from phase 1

A clear pilot plan

Aligned team

Documented workflow

Initial success criteria

Ready to move into configuration

Phase 2 (Days 31-60): Develop and test pilot

Now your team is ready to launch the pilot. In the next 30 days, you’ll build a working prototype through testing, iteration, and workflow evaluation. Keeping to the “start small” approach, limit scope to one region, channel, or product line. Key activities in this phase:

Train AI agents on real data

AI marketing agents are designed to perform a specific task, like generating a blog post, and to follow the workflow steps typical of blog writing: create an outline, provide SEO suggestions (keywords, competitor outlines, and common questions people ask), draft the post, and calculate a performance score based on specific criteria.

While the agent knows what to do, it needs your help to learn how your team creates content. Phase 2 of your pilot lays the foundation for training AI and reviewing whether its outputs meet your quality standards.

Help AI learn your brand voice and visual styles

Upload past top-performing articles and images to give AI enough performance context to create content that matches your team's output. Use your Brand Kit during AI generations to create on-brand, personalized content. Compare outputs with and without the image and voice styles applied, and refine iteratively until you're satisfied with the results.

Try repurposing

A popular AI use case for enterprise marketing is turning existing assets into fresh content. Repurposing is typically a low-complexity use case and safe to start with, as it involves using approved brand assets. Connect your digital asset management (DAM) system to our platform and bring over your assets to start creating new variations.

Try scaling personalized content

AI can create content that speaks to a specific cohort. But it needs your audience data.

Connect your Customer Data Platform (CDP) and Customer Relationship Management (CRM) systems to Typeface to create targeted content: personalized emails, customized landing pages, retargeted ads, social media ads targeting specific interests, and more. You can also create dynamic audiences by adding the demographic and psychographic data for the segment you want to target.

Test AI on content compliance

AI can also ingest your brand guideline documents to create compliant content. When your pilot program launches, you'll want to monitor how reliably the AI follows your messaging rules and maintains legally compliant language. This is essential if you operate in healthcare, law, finance, pharmaceuticals, or manufacturing.

Typeface rates AI-generated content based on how well it aligns with your audience and guidelines. Our AI content explainability feature helps your team optimize content faster and builds trust in the outputs.

Maintain human oversight

We’ve all interacted with popular AI assistants and recognize how human-like they can be. While AI is capable of creating engaging content, it's not perfect. Even when you give AI all the context it needs, you'll still want to review what it creates, just as you might do with any new team member or writer.

The pilot stage offers the opportunity to critically evaluate how the AI agent makes decisions and what it produces. Based on your assessment, you can improve your training data and fine-tune your prompts.

This is also when you can set up a feedback loop to make sure your quality standards are met. With Typeface, you can create connected workflows that send email and Slack notifications when content is ready for review, or customize the default workflows to match your team's existing review and approval processes.

Go live, with limits

In the pilot stage, you’re testing the AI tool’s capabilities and learning how to get the best results from it safely and strategically. Putting guardrails around the workflow you’ve chosen to automate creates a safe space to experiment before scaling more broadly. So even if something doesn't work, it’s contained to a small area and your existing workflows aren’t affected.

Capture feedback

Create feedback loops where users report on the tool's performance, and use their input to optimize for better results. Review performance against the established KPIs to determine the tool's value for your marketing operations.

Enterprise AI implementation: Results from Phase 2

A working prototype

Initial performance data

Clear insight into what's working and what's not

Phase 3 (Days 61-90): Refine and plan to scale

In these final 30 days, you can gather early impact signals. Use the initial AI agent experience and performance data to assess progress and determine whether to expand or adjust the pilot.

Key focus areas in this phase:

Measure impact

Good performance doesn't guarantee meaningful impact. Revisit your success metrics and gather qualitative feedback from your team. Did the agent reduce manual reviews? Did it reduce time-to-publish? Can you reallocate human effort to more strategic tasks? Get a complete picture of how AI is transforming your marketing operations.

Run a retrospective

A retrospective with your pilot team gives you the insights you need to improve. It tells you what you need to do when you scale. Key questions to ask:

What worked? What didn’t?

Where did we see the most impact?

What do we need to adjust for the next rollout?

What surprised us about AI agent performance?

What failed that we need to address?

What succeeded that we could replicate?

What would we do differently in the next implementation?

What organizational changes are needed to support AI scaling?

Determine the governance model that supports long-term AI success

A governance framework for AI is a rulebook for how AI must be implemented, used, and managed. Establishing it early builds accountability, compliance, and safety, and ensures these principles hold as AI gets more embedded in daily operations and gains new capabilities over time.

One of the first steps in setting up AI governance is to establish decision rights, like “What can the agent handle on its own and what requires human review?” You should also assign ownership for different layers of the system, like “Who trains and fine-tunes the agent?”, “Who monitors output quality?”, and “Who ensures alignment with legal and data policies?”
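Decision rights are easier to enforce once they're written down as explicit rules. The following Python sketch encodes one hypothetical policy: regulated content always goes to legal, a short allowlist of low-risk content types may publish automatically if it clears a brand-alignment threshold, and everything else goes to human review. The content types, owner roles, the 0.8 threshold, and the `brand_score` input are all assumptions for illustration; substitute the scoring and roles your team actually uses.

```python
from enum import Enum


class Route(Enum):
    AUTO_PUBLISH = "auto_publish"
    HUMAN_REVIEW = "human_review"
    LEGAL_REVIEW = "legal_review"


# Hypothetical decision rights: content types the agent may publish on its own.
# Anything not listed here defaults to human review.
AUTONOMOUS_CONTENT_TYPES = {"email_subject_line", "social_caption_variant"}

# Hypothetical owners for each layer of the system.
OWNERS = {
    "training_and_fine_tuning": "marketing operations",
    "output_quality": "content lead",
    "legal_and_data_policy": "legal and compliance",
}


def route_content(content_type: str, regulated: bool, brand_score: float,
                  min_score: float = 0.8) -> Route:
    """Decide who must sign off before AI-generated content goes live."""
    if regulated:
        # Regulated content never bypasses legal, whatever its score.
        return Route.LEGAL_REVIEW
    if content_type in AUTONOMOUS_CONTENT_TYPES and brand_score >= min_score:
        return Route.AUTO_PUBLISH
    return Route.HUMAN_REVIEW


if __name__ == "__main__":
    print(route_content("social_caption_variant", regulated=False, brand_score=0.9))
    print(route_content("blog_post", regulated=False, brand_score=0.95))
```

Defaulting unlisted content types to human review means the agent gains autonomy only when an owner deliberately adds a type to the allowlist.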

Create a scaling plan

If the pilot succeeded, expand the scope: identify the next workflow, channel, or region to activate. Set up quarterly check-ins to monitor performance and adjust your scaling approach.

If the pilot didn't meet expectations, revise and retest based on lessons learned, focusing on creating a system that can be improved rather than perfected immediately.

Once you’ve validated a use case:

Document the process, tools, and lessons

Identify other teams (e.g., sales, HR, business development) that can benefit from the platform’s capabilities

Reuse templates, training data, and workflows

Ensure adequate support for expanded deployment

Allow sufficient time for training and adoption

Enterprise AI implementation: Results from Phase 3

A validated pilot

Organizational readiness for scaled adoption

A foundation for long-term AI development

FAQs

1. What are the critical steps for successful AI change management within a marketing organization?

Though successful AI change management has many moving parts, these fundamental steps cannot be overlooked:

Rethink your workflows: AI doesn’t always fit neatly into existing processes. Leadership must ask where AI can improve workflows or change them altogether.

Show the team what’s in it for them: The AI tool should address a real pain point for its intended users and be easier than their current workflow. If the tool checks both boxes, you’ve cleared a big hurdle to adoption.

Design your pilot for success: Rolling out AI tools safely requires collaboration between marketing, IT, and legal teams. Talk to your vendor to understand the best piloting approach and identify what you need to handle internally, like tool training, integrations, and iterations.

2. What skills should marketing teams develop for successful AI adoption?

A growth and learning mindset can drive your AI pilot toward successful adoption. Employees who embrace learning how AI can improve their daily workflows are more likely to help peers and leadership achieve better results. Their willingness to experiment, learn from mistakes, and keep improving can create the vital early momentum that spreads across teams.

3. How do you handle data privacy compliance with AI marketing tools?

Data privacy and security are table stakes, and you need a few key confirmations from your vendor. For example, does the AI platform comply with the data privacy regulations relevant to you, like HIPAA if you're in healthcare, or CCPA and GDPR if they apply to your business? It's also worth reviewing how well it supports integrations, single sign-on, and role-based access controls.

Typeface is enterprise-safe by design. It offers built-in guardrails to protect your brand’s content, workflows, and data, while aligning with industry standards for responsible, secure AI use.

Next Steps

Prepare for the future of work by putting your team first and adopting the right enterprise agentic marketing platform. Fortune 500 companies across industries use Typeface for speed, scale, and automation that frees teams to focus on strategy.

Try Typeface with a demo or contact sales.
