AI at Work

Why AI Rollouts Fail Without Clear Leadership Context (And How to Fix It)

Ross Guthrie · Applied AI Strategist

July 16th, 2025 · 14 min read

Picture this: You just invested six figures in a marketing AI platform. The tech is cutting-edge, the potential is obvious, and your team is eager to start creating better content faster. Three months later, adoption is at a mere 30%, your marketing team is frustrated, and leadership is questioning the ROI.

This can be an enterprise marketing leader’s biggest nightmare. AI adoption is imperative if you want to stay ahead of the game, but ensuring confident AI use across teams is still a challenge.

Case in point: According to HubSpot's 2025 AI Trends for Marketers, 98% of organizations plan to increase investment in AI, but employee adoption still lags, with just 51% eager to use AI.

Why do AI rollouts fail? Often, the answer is simple: AI doesn't fail; poor communication does.

The difference between AI rollouts that transform marketing operations and those that collect digital dust isn't the technology itself. More often than not, it's whether leadership provides the context, direction, and ongoing support teams need to succeed.

What happens when AI is introduced without clear vision?

AI can’t succeed without intentional effort. When CIOs or CMOs bring AI into the business without a well-articulated purpose, teams are left guessing. Are we using it to reduce costs? Speed up production? Improve personalization? Without answers, even the best tools under-deliver.

Working with enterprise leaders, we've noticed that they often have a high-level understanding of where they would like to apply AI and what kind of results they would like to see. For instance, they may want to reduce agency spending on low-level marketing tactics, increase personalization, or scale ABM campaigns.

At an execution level, though, what is often lacking is a set of clear guiding policies and a coherent action plan to help their teams understand exactly how AI will benefit their day-to-day work.

Marketing managers spend weeks figuring out basic functionality instead of focusing on how the AI platform fits their actual workflows.

Content creators worry about job security instead of embracing new capabilities.

Even leadership sometimes struggles to envision how to get from its current AI maturity level to one that lets it apply AI effectively and achieve long-term success.

The result? Slow adoption, inconsistent usage, and missed opportunities.

Common reasons AI rollouts fail without strong leadership

When it comes to AI rollouts, we’ve seen enterprise leaders experience some common points of friction.

Lack of defined success metrics

Many AI rollouts stumble when leadership invests in powerful technology but doesn't define what "winning" looks like. Without clear success metrics, teams can't distinguish between productive AI usage and expensive experimentation.

The measurement gap creates several problems:

Teams optimize for the wrong outcomes. A content creator might focus on generating 50 social media posts per day using AI, thinking quantity equals success. Meanwhile, engagement rates plummet because the content lacks strategic focus. Without defined quality metrics, speed becomes the default measure of AI value.

Progress becomes impossible to track. Marketing managers can't report meaningful ROI when leadership hasn't established KPIs or baseline measurements. Is reducing content creation time from 4 hours to 2 hours a win? What about maintaining the same timeline but doubling the segments you reach? Without defined success criteria, every outcome becomes subjective.

Resource allocation suffers. Teams waste time on low-impact AI applications while neglecting high-value opportunities. For instance, they might spend weeks perfecting AI-generated email subject lines (marginal impact) when they could be creating entire customer-journey email campaigns in minutes with Typeface Email Agent (transformational impact).

What good success metrics look like:

Instead of vague goals like "improve content efficiency," leadership needs to establish specific, measurable targets like:

Reduce average content production time by 30% while meeting current engagement benchmarks

Increase content output by 40% without additional headcount

Achieve 85% brand voice consistency across all AI-generated content

Complete content calendar planning 2 weeks faster than previous quarters

Reach X number of previously underserved customer segments
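Targets like these only become objective once each KPI has a recorded pre-rollout baseline that later readings are compared against. The Python sketch below illustrates that idea under our own assumptions: the `Metric` class, the metric names, and every number in it are hypothetical and are not part of Typeface or any other product.

```python
from dataclasses import dataclass


@dataclass
class Metric:
    """A KPI with a pre-rollout baseline, a current reading, and a target change (hypothetical)."""
    name: str
    baseline: float
    current: float
    target_change_pct: float  # negative means "reduce by", positive means "increase by"

    def change_pct(self) -> float:
        # Percent change from the baseline to the current reading
        return (self.current - self.baseline) / self.baseline * 100

    def met(self) -> bool:
        change = self.change_pct()
        if self.target_change_pct < 0:
            # Reduction target: the drop must be at least as large as the target
            return change <= self.target_change_pct
        # Increase target: the gain must be at least as large as the target
        return change >= self.target_change_pct


# Hypothetical numbers for illustration only
metrics = [
    Metric("avg production time (hours)", baseline=4.0, current=2.0, target_change_pct=-30.0),
    Metric("pieces published per month", baseline=100.0, current=130.0, target_change_pct=40.0),
]

for m in metrics:
    status = "met" if m.met() else "not yet met"
    print(f"{m.name}: {m.change_pct():+.0f}% vs target {m.target_change_pct:+.0f}% -> {status}")
```

With these made-up figures, cutting production time from 4 hours to 2 hours clears the 30% reduction target, while a 30% rise in output still falls short of a 40% goal. In practice you would also track a quality guardrail, such as engagement, alongside each speed or volume metric so that speed does not become the only measure of AI value.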

Typeface makes this easier because it not only helps scale content production but also assists in measurement and reporting by letting you analyze the generated content.

Features like Content Insights, which tells you how well your content is likely to perform in terms of SEO and audience alignment, and Brand Agent, which tells you how closely the content follows your brand guidelines, help marketing managers and CMOs better assess AI's impact.

Lack of an empowered team

Even with clear goals, AI rollouts fail when leadership does not give teams the authority and resources to make implementation decisions. Many organizations treat AI adoption as a top-down mandate rather than a collaborative effort that requires empowered team members at every level.

The empowerment gap manifests in several ways:

Decision-making bottlenecks slow progress. Teams discover they need approval for basic template modifications, brand voice adjustments, or workflow integrations. What should be quick iterations become week-long approval processes. Marketing managers spend more time requesting permissions than actually using the AI tools.

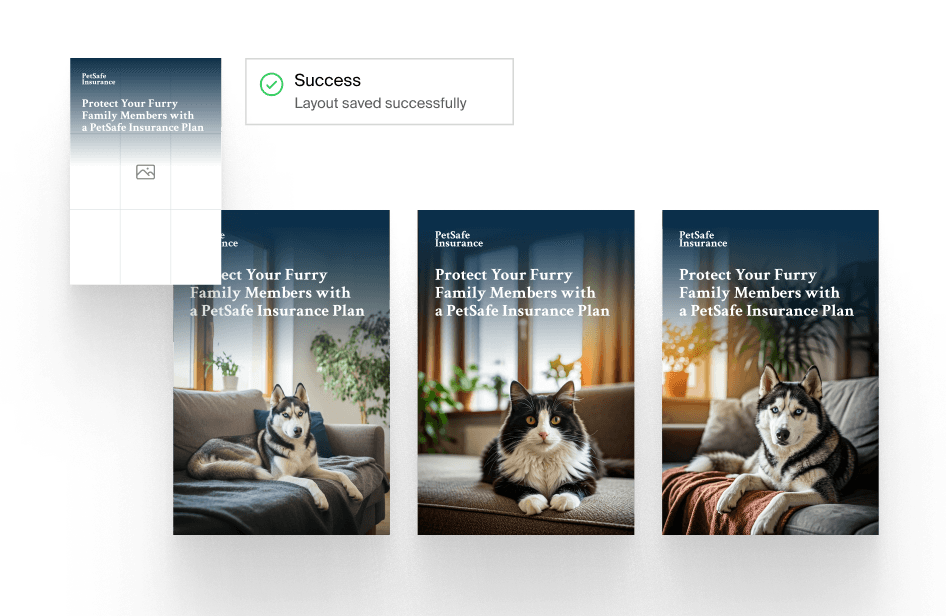

With Typeface, however, these approval bottlenecks can be easily avoided. By saving pre-approved ad and email layouts as editable templates, preserving multiple brand voices like your social media voice or your CEO's thought leadership voice, and establishing clear brand rules, you can save your team a lot of the back-and-forth.

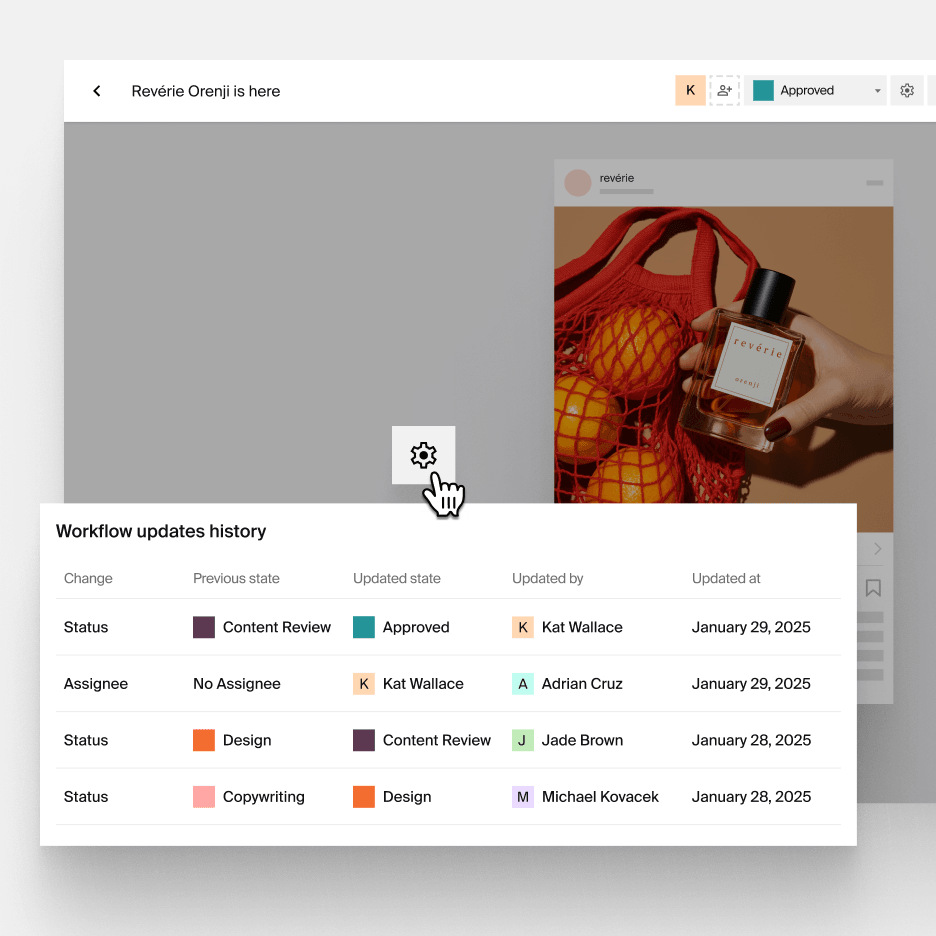

For high-stakes processes that involve reviews and approvals, the content workflow manager makes handoffs and collaboration extremely smooth.

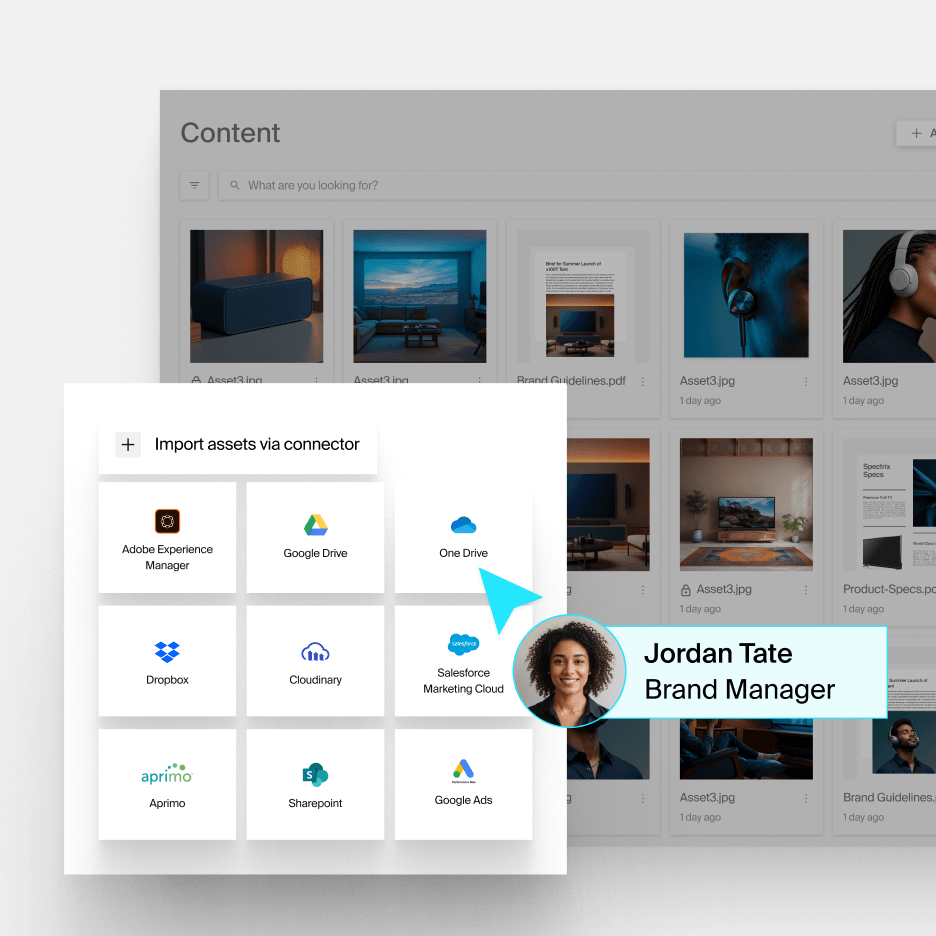

People have limited access to necessary resources. Teams receive AI tools but lack access to brand guidelines, content databases, or integration capabilities they need to succeed. They're asked to create consistent, high-quality content while working with incomplete information or restricted system access.

Typeface addresses this issue by acting as a centralized hub for all brand assets and databases. Marketing leaders we have worked with love how everything from their brand and marketing materials to their audience data to most of their existing tech stack can be integrated and accessed from one place.

There’s no authority to experiment and learn. Effective AI adoption requires experimentation. Teams need permission to try new approaches, fail fast, and iterate based on results. Without this authority, they default to safe, limited applications that deliver minimal value.

What empowered AI teams look like:

Successfully empowered teams have clear authority to:

Modify templates and content frameworks within established brand guidelines

Integrate AI tools with existing marketing systems and databases

Experiment with new use cases and share learnings across departments

Access necessary resources including brand assets, historical performance data, and technical support

With Typeface, you can give your team this level of autonomy while keeping risk in check, as granular role-based access control and complete audit trails on every content item ensure accountability at each step.

Unmanaged risk

Leadership sometimes fails to acknowledge that early-stage AI technology presents some real risks. Failing to build systematic approaches to manage and mitigate these risks can have severe implications for the team or the business as a whole.

The risk management gap creates several problems:

Teams either move too fast or too slow. Without clear risk frameworks, some teams rush to implement AI for high-stakes content like executive communications or major campaign launches. Others become so concerned about potential issues that they never move beyond basic experimentation. Neither approach maximizes AI's potential while protecting the organization.

Measurement frameworks ignore risk mitigation. Most AI success metrics focus on efficiency gains and output quality but fail to account for risk management. Teams optimize for speed and volume without considering brand consistency, legal compliance, or SEO impact. This creates a false sense of success that can lead to significant downstream problems.

There’s no systematic approach to learning from controlled failure. Teams need frameworks that allow them to fail safely while gathering valuable learning data.

What strategic risk management looks like:

Smart leaders build risk mitigation directly into their AI measurement frameworks and rollout strategies:

Start with low-risk, high-learning opportunities. For example, if your goal is to publish AI-generated blog posts but you're concerned about SEO risk, begin with long-tail topics where search volume is low but potential return is high. This approach lets you test content quality and search performance without jeopardizing rankings for your most valuable keywords.

Implement checkpoints that match the stakes. AI-generated social media posts might need one approval layer, while blog content requires brand and legal review.

Monitor systematically over time. Track performance metrics for 2-3 months before expanding to higher-risk applications. This creates a data-driven foundation for scaling AI use while maintaining quality standards.
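One way to make stakes-matched checkpoints and a monitoring window explicit is to write them down as a simple policy. The Python sketch below is a minimal illustration under stated assumptions: the tier names, content types, reviewer roles, and the two-month default are hypothetical choices that mirror the examples above, not a Typeface feature or configuration format.

```python
# Hypothetical policy: approval layers scale with the stakes of the content.
REVIEW_STEPS = {
    "low": ["manager"],           # one approval layer, e.g. social posts
    "high": ["brand", "legal"],   # brand and legal review, e.g. blog content
}

# Hypothetical mapping of content types to risk tiers
CONTENT_RISK = {
    "social_post": "low",
    "blog_post": "high",
    "executive_communication": "high",
}


def required_reviews(content_type: str) -> list[str]:
    # Unknown content types fall back to the strictest tier, not the loosest
    tier = CONTENT_RISK.get(content_type, "high")
    return list(REVIEW_STEPS[tier])  # return a copy so callers can't edit the policy


def ready_to_expand(months_monitored: float, targets_met: bool, min_months: float = 2) -> bool:
    # Move to higher-risk applications only after a monitoring window with targets met
    return months_monitored >= min_months and targets_met


for content_type in ["social_post", "blog_post", "press_release"]:
    print(content_type, required_reviews(content_type))
```

Defaulting unrecognized content types to the strictest tier is a deliberate choice here: a new use case gets full review until someone consciously classifies it as low risk.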

The role of CIOs and CMOs in driving AI success

Well-aligned leadership vision in AI transformation follows the same principles as any successful organizational change effort. It cuts across people, processes, and technology, connecting all three components in a way that targets early successes while building toward longer-term transformation.

The people dimension is critical because teams are the ones doing the work, and AI fundamentally changes how work gets done. They need a voice in implementation decisions, not just training on new tools.

Process alignment requires buy-in from the owners of the most rigid and exacting components of current workflows — the people who understand where flexibility exists and where it doesn't.

Technology evaluation needs frameworks that assess whether AI tools actually serve the needs of people and processes while enabling successful outcomes.

The most effective leaders borrow from transformation efforts that have succeeded in the past while recognizing that AI transformation uniquely impacts how people accomplish their daily work. This human-centered approach to change separates successful AI rollouts from expensive experiments.

What should leaders communicate internally before AI rollout?

Successful AI rollouts start with comprehensive internal communication. That’s how leadership sets the right expectations for success. Leaders should clearly articulate:

Strategic context: CMOs must map existing workflows, identify opportunities for acceleration, and figure out which steps take the most time and why. Answer questions like: How does this AI tool support our broader marketing objectives? What specific challenges are we solving?

Success metrics: What does good performance look like in 3, 6, and 12 months? How will you measure progress? It is important to limit the scope for quick wins and expand the scope as wins accumulate.

Role clarity: How will AI change daily workflows without threatening job security? What new skills will teams develop? Most importantly, involve the people these changes will impact early on in the rollout.

Appointing a dedicated program manager with a product mindset can also be extremely helpful. They can serve as the technology expert, translate business needs into existing technical capabilities, and relay feedback to the vendor.

They manage rollout programs and build experimentation approaches for future capabilities so that the team can stay focused on outcomes enabled by current capabilities.

Real-world examples of effective AI alignment

The difference between successful and failed AI rollouts often comes down to how leadership defines success from the beginning. We've seen organizations make critical mistakes by assuming AI would immediately deliver the desired outcomes, while others succeeded by establishing realistic timelines and focusing on strategic opportunities.

Here are two specific examples from our experiences with customers.

A cautionary tale: When high standards meet unrealistic expectations

One organization invested heavily in AI for content creation but pulled the plug after just six weeks. Their mistake wasn't setting high quality standards — that's essential. The problem was assuming AI capability would immediately equal or exceed human performance without defining specific quality thresholds or setting realistic expectations with users.

The lesson here applies broadly: when implementing AI for high-visibility applications like major customer segments, start with areas where upside is positive and downside is minimal.

For example, if you're enabling more ad personalization with generative AI, focus on customer segments that are relatively small but have significant upside potential. This approach lets you serve segments that might otherwise go unserved, where any improvement represents pure gain rather than risk to existing performance.

A success story: Clear goals drive collaborative adoption

Contrast that with an organization that approached us with laser focus. Their goal was specific and measurable: reduce blog creation time from 6 weeks to 1 week to increase overall content velocity and drive more aggregate traffic to their website.

This clarity enabled a collaborative approach where brand marketing became partners in the rollout rather than stakeholders managing in silos. The clear timeline and traffic goals allowed teams to focus on improving content quality and brand adherence systematically, rather than unveiling something that could be deeply misaligned with user expectations.

The key difference? Leadership communication that turned AI adoption into a shared mission rather than an imposed mandate.

Frequently asked questions about leadership context in AI rollouts

What does effective executive sponsorship in AI adoption look like?

Executive sponsorship goes beyond initial approval. It means:

Regular check-ins with team leads to address roadblocks

Public recognition of AI adoption wins

Budget allocation for ongoing training and tool optimization

Cross-functional collaboration to break down silos

Willingness to adjust strategy based on real-world usage patterns

What early success signals show AI is being adopted well?

Strong AI adoption shows up in both metrics and behavior:

Quantitative signals:

A growing share of team members using AI in real workflows

Clear documentation of wins (e.g., time saved, campaign lift)

Faster content production timelines and reduced revision cycles for some content types

Qualitative signals:

Teams proactively suggesting new AI use cases

Positive feedback from internal stakeholders

Integration of AI workflows into standard operating procedures

How do high-performing teams collaborate across functions?

Successful AI implementation breaks down traditional silos. Marketing teams work with IT to optimize tool configurations. Content creators share templates and best practices. Data analysts help teams understand which AI-generated content performs best.

This collaboration doesn't happen on its own — it requires intentional leadership facilitation and clear communication channels.

How can leaders maintain alignment after an AI rollout?

The role of leadership does not end at a successful rollout. To achieve long-term goals, constant monitoring and adjustments are crucial. Post-launch alignment requires consistent attention:

Conduct monthly retros to assess adoption gaps

Update teams on roadmap and evolving use cases

Highlight individual and team contributions to encourage buy-in

Contextual leadership is a competitive advantage

The companies that succeed with AI aren't necessarily those with the biggest budgets or the most advanced tools. They're the ones where leadership provides clear context, realistic expectations, and ongoing support.

Key takeaways for marketing and IT leaders:

Communicate the strategic "why" before focusing on the technical "how"

Set realistic 90-day milestones instead of promising immediate transformation

Invest in ongoing training and support, not just initial implementation

Create feedback loops to continuously improve AI adoption

Celebrate wins and share learnings across teams

Next steps: Start preparing now to align your teams around your AI adoption strategy. If you’re looking for a marketing AI platform that enables seamless collaboration with your team while ensuring strong brand governance and high-quality output, Typeface is a perfect fit! Get a demo today to see for yourself.
