
Why Do Executive Expectations Clash with End User Reality in AI Rollouts? (And How to Bridge the Gap)

Akshita Sharma · Content Marketing Associate

August 30th, 2025 · 12 min read

Leadership approved an AI investment after a compelling boardroom pitch. But six months later, adoption is sluggish, teams are frustrated, and you're questioning whether you moved too quickly. This scenario plays out across enterprises every day. In fact, a recent MIT study found that 95% of AI pilots have yet to show measurable ROI.

While 87% of executives anticipate revenue growth from generative AI tools, only 4% of companies are able to extract meaningful value from their investments.

The problem is not AI. It is the chasm between executive vision and ground-level execution.

Too many organizations sprint toward deployment without considering what happens next. Teams find themselves wrestling with complex new workflows, armed with little context, unclear processes, and conflicting expectations about what success looks like.

To understand how companies can bridge this gap, we spoke with Ross Guthrie, Customer Success Manager at Typeface, who works daily with marketing teams navigating their AI transformation journeys.

His insights reveal why executive expectations clash with end user reality, and what organizations can do to create smoother, more effective AI rollouts.

Why do exec expectations clash with end user reality?

When executive expectations clash with the experience of end users, it's usually because leaders focus on AI's potential while users grapple with its practical limitations. It's a classic case of vision meeting reality.

Guthrie puts it well: "The promise and potential of emerging technology always extends far beyond the current state. This naturally creates a tension between what is and what can be."

Executives plan aggressive timelines, with 78% expecting ROI within 1–3 years and 31% within just six months. The ground-level reality is that most organizations need 12 months or more just to resolve adoption challenges.

The misalignment creates a situation where executive urgency crashes into the messy, complex reality of getting people and systems to actually work with AI effectively.

No wonder 80% of AI projects fail, twice the rate of traditional IT implementations. The expectations simply aren't aligned with what it takes to make AI work in the real world.

But why does this gap persist across so many organizations?

We found three critical dynamics that drive the disconnect between boardroom ambitions and operational realities:

1. Speed versus readiness paradox

Guthrie recalls hearing early in his career: "fast, cheap, high-quality—pick two."

It's the classic triangle that forces trade-offs. You can deliver something quickly and affordably, but quality suffers. Or you can have high quality at speed, but it won't be cheap.

Yet when it comes to AI implementations, many executives seem to believe they can bypass this constraint entirely. They want rapid deployment, minimal investment, and exceptional results, all at once.

"If you've got a budget to deploy, that capital does nothing for you sitting still," Guthrie explains. For Fortune 500 companies, the return potential is genuinely massive. "100k investments can easily generate 10x in output."

However, this promise of massive returns hinges on a critical caveat: smooth, immediate adoption across teams and workflows.

It's here that the disconnect emerges.

And the disconnect manifests differently at each level of the organization:

Executives operate from a strategic vantage point where AI represents billion-dollar market opportunities. They see competitors moving fast and understand that delayed deployment means lost revenue.

Marketing teams navigate today's messy reality: learning new workflows, managing quality concerns, and maintaining their existing responsibilities while adopting transformative technology.

IT departments get caught in the middle, tasked with rapidly deploying systems while ensuring security, governance, and compliance.

Everyone's moving fast, but they're not necessarily moving in the same direction. And that's where even the most promising AI initiatives can quickly derail.

2. Rushing from pilot to full scale without proof points

The expectation gap becomes most visible when promising pilots encounter scaling challenges.

Guthrie recently worked with a multinational manufacturer exploring AI for account-based marketing (ABM).

"The executive team saw the promise immediately and instantly tasked their team, who all had 'day jobs', with tackling this problem with their 500 top accounts," he recounts.

But instead of rushing toward the full vision, Guthrie's team conducted a transformation assessment that revealed the current state of the manufacturer's marketing operations.

"We said, let's start with one customer and prove that. That will tell us in 6 months how we can solve all of this. Then we make a plan and go forward."

The approach of starting with proof points rather than performance targets allowed the leadership to see concrete progress while giving the marketing team time to develop competency.

The lesson here extends beyond any single implementation: successful AI adoption requires aligning executive timelines with organizational learning curves.

Teams using an AI platform like Typeface can demonstrate immediate value through improved content production speed and brand consistency, while building organizational muscle for more complex personalization and campaign optimization over time.

3. When teams are not given room to fail

"In technology, mistakes are expensive," Guthrie notes. "Not because it's expensive to deploy tech but because the disruption and churn that it creates pulls energy from everything it touches. Which is why you want to get it wrong FAST, if you're getting it wrong."

This reveals why many AI rollouts fail: organizations optimize for speed of deployment rather than speed of learning.

58% of CMOs admit to investing in AI technologies before understanding their value, driven by competitive pressure rather than strategic clarity.

"Leadership wants their team to take risks," Guthrie observes. "But, historically, employees aren't rewarded for failures. This is the fundamental disconnect."

The result is often expensive course corrections that could have been avoided if the success criteria had made space for failure.

Guthrie explains, "Success is getting to a proof point. That is your starting point. Failure is thinking you need to get to a specific threshold: i.e. 10% conversion lift."

How do you build a bridge between AI vision and execution?

The most successful AI transformations don't start with mandates from the boardroom — they begin when executives roll up their sleeves and participate in learning alongside their teams.

While introducing Typeface to marketing teams, Guthrie often encounters the same few concerns:

"I don't know anything about AI. How can I do this?"

"Will it be hard to use?"

"How can we trust that it won't make a mistake?"

"Will it damage our brand?"

These questions reveal that the gap between AI's promise and people's readiness is deeply human. Teams need psychological safety to experiment with AI tools that could reshape how they work.

Guthrie explains, "Leadership support means absorbing the risk so your teams can explore the unknown. It means publicly embracing the idea that early failure is not only expected—it's productive. That kind of support doesn't just lead to better AI outcomes. It builds a culture where innovation can actually take root."

Here are some strategies organizations can employ to build the bridge between AI vision and execution:

1. Start with organizational learning

"Early deployments of emerging tech benefit from fast and cheap solutions that start with small value — 100k, 300k. But what you gain in organizational learnings and cultural shift is worth hundreds of millions over 5 years."

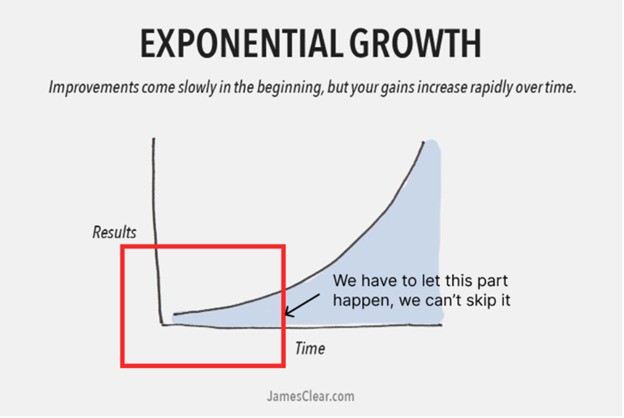

This perspective shifts the focus from immediate ROI calculations to building sustainable competitive advantage. Organizations that treat initial AI investments as capability building rather than performance improvement position themselves for long-term success. They are able to capture exponential value as the technology matures, and their teams become fluent in AI-augmented workflows.

This approach requires patience, but it pays dividends in ways that are hard to quantify upfront. Teams develop intuition about when AI helps and when it doesn't. They learn to prompt effectively, review outputs critically, and integrate AI seamlessly into existing processes. Most importantly, they overcome the psychological barriers that often derail AI adoption.

For our customers, this often means beginning with specific content types or audience segments where improvement represents pure gain. Teams can learn AI workflows while serving previously underserved customer segments or creating content that would otherwise require expensive agency support.

2. Redefine success as discovery

"One of the most important strategies I've learned is to reframe success as learning, not lift," Guthrie emphasizes. "Too often, teams define success in terms of performance metrics before they've even explored the terrain."

Traditional business metrics like efficiency gains, cost savings, or output increases can actually become counterproductive in early AI deployments.

They create pressure to optimize before you understand what you're optimizing for.

As we alluded to above, Guthrie advocates for what he calls a "proof-point strategy" that focuses on answering foundational questions: What happens when we try this? What breaks? What works?

This discovery mindset creates psychological safety for experimentation. Discovery-focused teams uncover insights that performance-focused teams miss entirely. They notice unexpected use cases and identify integration challenges before they become roadblocks.

3. Allow space for experimentation

"The most effective support I've seen from leadership isn't just about funding or headcount. It's about creating cover," Guthrie notes. Successful AI rollouts require leadership that says, "We expect this to be messy. We're not chasing perfection — we're chasing proof."

Teams might spend hours learning to prompt effectively, testing different approaches, or figuring out how to integrate AI outputs into existing workflows. To observers, this can appear inefficient compared to traditional execution. But leaders who understand the long-term game recognize this apparent inefficiency as essential capability building.

This support manifests in specific actions:

Protecting teams from premature scrutiny during learning phases

Making space for experiments that might not work

Redefining success as reaching proof points

Running interference with other stakeholders who might pressure for quick wins

This approach works especially well when the technology itself provides safety guardrails. Typeface's pre-approved templates, brand voice preservation, and workflow management features allow teams to experiment confidently while maintaining quality standards.

What does realistic progress look like?

End users see that fast and cheap, yet maniacally focused, is the way to go.

Executives see what can be in 5 years and drive intensely towards that.

But according to Guthrie, realistic progress often looks slow, like compounding interest. "The trick is aligning expectations around that curve," he says.

Organizations should reframe what success looks like at each stage:

| In months 1-3 | In months 4-12 | Beyond year one |
|---|---|---|
| Teams successfully completing basic workflows without constant troubleshooting | Consistent quality that reduces review cycles | Strategic applications like personalization at scale |
| Quality output that meets brand standards 80% of the time | Integration with existing tools and workflows | Creative breakthroughs that weren't possible before |
| Reduced time-to-first-draft, even if final output still requires human refinement | Team confidence in using AI for routine tasks | Compound time savings that free teams for higher-value work |
| Clear documentation of what works and what doesn't | Measurable efficiency gains in specific use cases | ROI that justifies executive investment expectations |

Key takeaways

The most successful AI transformations share common approaches that any organization can adopt:

Plan for learning curves, not learning cliffs: Budget 12+ months for meaningful transformation while celebrating shorter-term wins that build organizational momentum

Start with proof points, not performance targets: Focus on demonstrating possibility rather than immediately optimizing outcomes during initial implementation phases

Invest in organizational capability: Allocate as many resources to change management and training as you do to technology licensing

Create safe experimentation environments: Shield teams from premature scrutiny while they develop competency, using platforms with built-in governance to enable confident exploration

Align success metrics with reality: Measure learning and capability building alongside performance improvement, especially in early stages

The organizations succeeding with AI aren't necessarily those with the biggest budgets or most advanced tools. They're the ones where leadership understands that executive vision and operational reality exist on different timelines, and where leaders actively manage the bridge between them.

As Guthrie puts it: "In AI, success isn't about getting it perfect — it's about proving it's possible."

Executives who embrace this mindset, while giving their teams an AI platform like Typeface that enables safe experimentation within enterprise-grade governance, position their organizations for successful AI rollouts rather than expensive pilot purgatory.

Try Typeface today and help your teams prove what's possible! Book a demo.
