AI at Work

AI and Advertising Governance: How to Accelerate with Confidence Using AI in Digital Advertising

Neelam Goswami · Content Marketing Associate

August 18th, 2025 · 14 min read

In the race to capture attention and drive results, marketing leaders are under constant pressure to deliver ad campaigns faster and at greater scale.

AI in digital advertising is taking a lot of this pressure off marketing teams, from speeding up ad creative production to hyper-personalizing campaigns across multiple platforms simultaneously.

But for CIOs overseeing enterprise adoption, that speed comes with critical questions:

How do we maintain brand integrity across thousands of ad variations?

What about regulatory compliance in advertising across different markets?

Can we scale programmatic advertising responsibly?

As businesses increasingly rely on AI to generate ad copy, create display creatives, personalize for micro-audiences, and optimize ad campaigns 10X faster across Google Ads, Facebook, LinkedIn, and other platforms, the stakes are getting higher. A single AI-generated advertisement misaligned with brand values or violating advertising regulations can damage years of brand building and result in costly regulatory penalties.

This underscores the importance of adopting robust approval workflows, bias mitigation strategies, and brand-safety checks, so that marketing teams can confidently accelerate their digital advertising efforts without compromising trust or compliance.

To explore these important governance challenges, we sat down with Mohit Kalra, Chief Information Security Officer at Typeface, and Saachi Shah, Product Manager at Typeface, who shared their insights on building effective AI governance frameworks for advertising at enterprise scale.

Where things can go wrong: Risks of unchecked AI advertising

Brand safety

Without proper guardrails when using AI in digital advertising, you might end up with language that doesn't align with your brand voice, visuals that contradict brand aesthetics, or messages that seem out of context for a target audience or market. These brand safety issues can erode consumer trust and require expensive damage control efforts.

In Shah’s experience working with clients, maintaining brand consistency across all content is one of the biggest challenges they face.

It’s hard to create on-brand content across the board (by country, by product, etc.). Things especially fall apart when you get to the OU (operational unit) level. They will often churn out off-brand content due to time constraints or simply not knowing, and publish it.

Legal risks in advertising

Advertising regulatory frameworks and evolving platform policies require organizations to be especially vigilant. AI systems can generate content that inadvertently makes unsubstantiated claims, violates truth-in-advertising regulations, or omits required disclosures. Bias in the data that AI models are trained on can also lead to discriminatory advertising practices. That is why choosing AI platforms with strong enterprise guardrails is critical.

Transparency and data privacy in ad targeting

AI in digital advertising often works with vast amounts of consumer behavioral data for ad targeting and personalization, raising significant privacy concerns. Without proper governance, AI systems may process personal information for ad targeting in ways that violate data protection regulations like GDPR or CCPA. The "black box" nature of many AI advertising platforms makes it difficult to explain targeting decisions to regulators or comply with transparency requirements for personalized advertising.

Kalra emphasizes the need for awareness and training to ensure compliance with privacy regulations.

Organizations need to be aware of regulatory AI compliance frameworks globally and ensure adherence to these frameworks at every level.

Developers and users require training and established guidelines on how to use AI without putting company and customer information at risk.

AI-driven processes must be explainable, with clear disclosures about how data is used and how personalization decisions are made.

Why AI governance matters in advertising

Effective governance transforms AI in digital advertising into a strategic advantage rather than a potential liability, by establishing clear boundaries, accountability, and quality controls.

Having robust governance frameworks enables organizations to move quickly and confidently, knowing that proper safeguards are in place to prevent costly ad disapprovals, campaign suspensions, or regulatory violations. Strong governance structures also demonstrate to advertising platforms, regulators, and customers that the organization takes its responsibilities seriously when deploying AI technologies.

Building approval workflows for AI in digital advertising

Successful AI advertising governance requires carefully designed approval workflows that balance campaign launch speed with human oversight of ad content and targeting parameters.

These workflows should identify significant decision points where human review is essential (such as high-spend campaigns or sensitive audience targeting) while allowing AI systems to operate autonomously for low-risk ad variations and A/B tests.
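As a rough illustration of such decision points, the routing rule can be sketched in a few lines of Python. The spend threshold, the sensitive targeting categories, and every name below are hypothetical placeholders, not Typeface features or recommended values; each organization would set its own.

```python
from dataclasses import dataclass

# Hypothetical values for illustration only; take these from your own policy.
HIGH_SPEND_THRESHOLD_USD = 10_000
SENSITIVE_TARGETING = {"health", "finance", "age", "political"}


@dataclass
class AdVariation:
    name: str
    daily_budget_usd: float
    targeting_categories: frozenset = frozenset()


def route_for_review(ad: AdVariation) -> str:
    """Return "human_review" or "auto_approve" for one ad variation."""
    # High-spend campaigns always get a human decision.
    if ad.daily_budget_usd >= HIGH_SPEND_THRESHOLD_USD:
        return "human_review"
    # Any overlap with a sensitive audience category also needs a human.
    if ad.targeting_categories & SENSITIVE_TARGETING:
        return "human_review"
    # Everything else (low-risk variations, A/B tests) can proceed.
    return "auto_approve"


low_risk = AdVariation("headline-test-b", daily_budget_usd=50.0)
sensitive = AdVariation("clinic-promo", 50.0, frozenset({"health"}))
print(route_for_review(low_risk), route_for_review(sensitive))
```

Keeping the rule in one small function makes the criteria easy to review and change as policy evolves.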

What are the key components of AI advertising governance?

1. AI usage policies

Define what AI can and cannot generate by establishing clear boundaries for AI applications in your advertising operations. These policies should specify approved use cases, prohibited content types, and quality standards that AI-generated ad content must meet.
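One way to make such a policy checkable is to express it as data and run every draft against it. The sketch below is a simplified assumption of how that might look: the field names, the example terms, and the length limit are all invented for illustration, and a plain substring match stands in for whatever content checks a real platform performs.

```python
from dataclasses import dataclass


@dataclass
class UsagePolicy:
    approved_use_cases: set   # what AI may be used for
    prohibited_terms: set     # lowercase terms that must not appear in ad copy
    max_headline_chars: int   # a simple quality standard


def check_policy(policy: UsagePolicy, use_case: str, headline: str) -> list:
    """Return a list of policy violations; an empty list means the draft passes."""
    violations = []
    if use_case not in policy.approved_use_cases:
        violations.append(f"use case not approved: {use_case}")
    lowered = headline.lower()
    for term in sorted(policy.prohibited_terms):
        if term in lowered:
            violations.append(f"prohibited term: {term}")
    if len(headline) > policy.max_headline_chars:
        violations.append("headline exceeds length limit")
    return violations


# Hypothetical policy values, for illustration only.
policy = UsagePolicy(
    approved_use_cases={"ad_copy", "display_creative"},
    prohibited_terms={"guaranteed", "risk-free"},
    max_headline_chars=30,
)
print(check_policy(policy, "ad_copy", "Guaranteed results in a week"))
```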

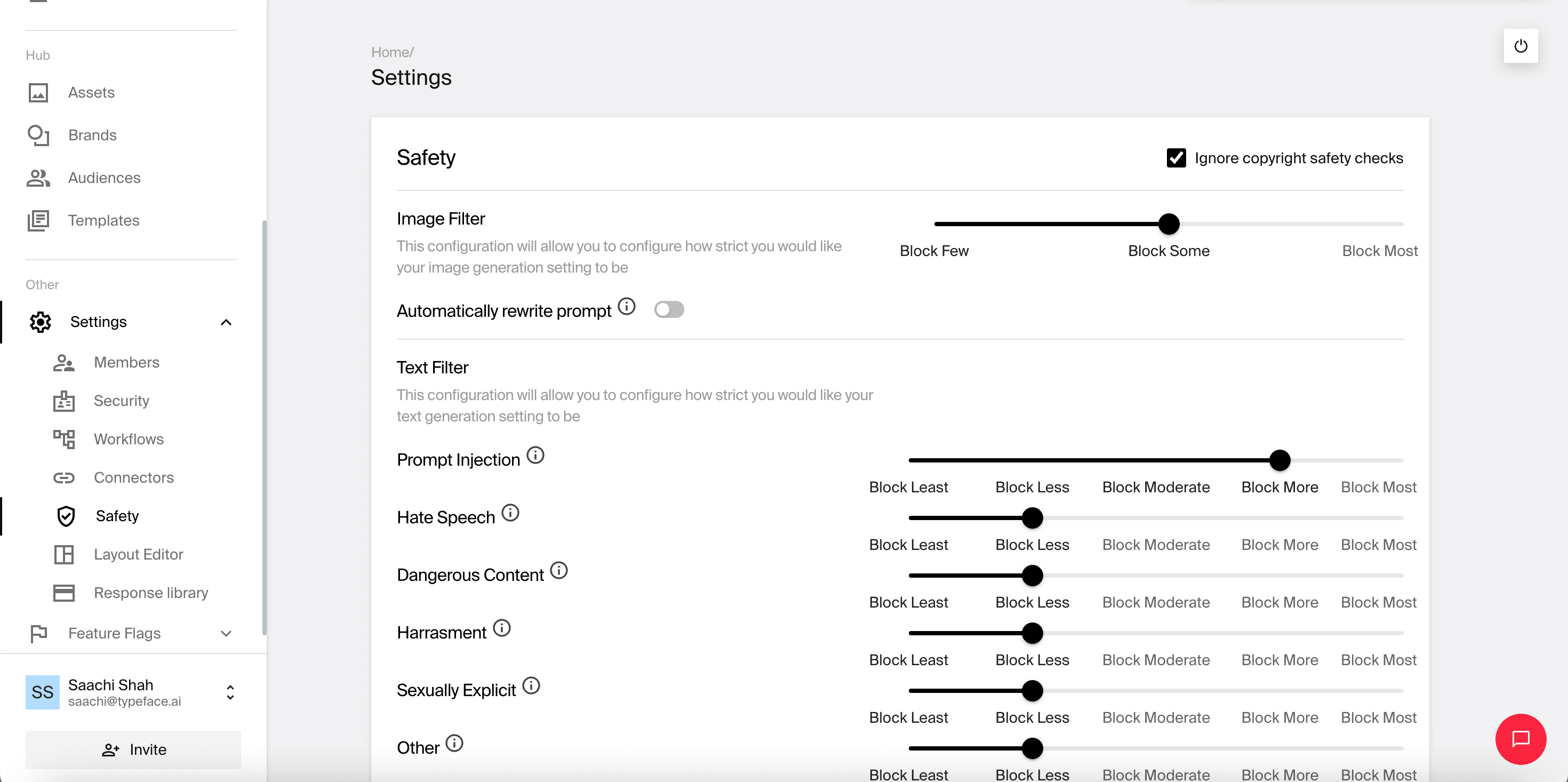

Responsible AI settings on Typeface give you better control over your AI usage policies

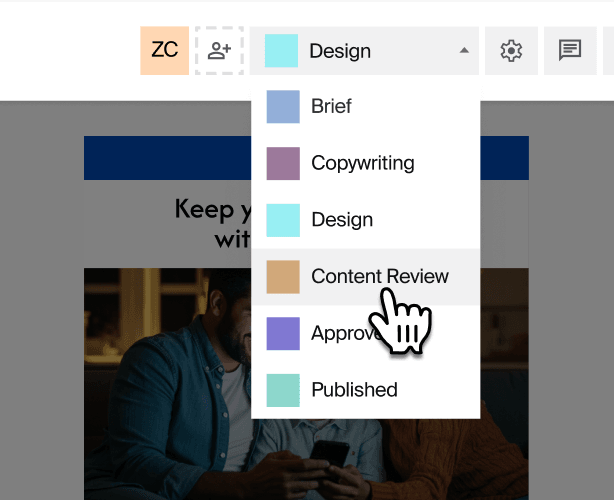

2. Approval workflows

Set human sign-off points before your ads go live by implementing a multi-tiered review process that involves relevant stakeholders. Identify who needs to approve different types of content and establish clear criteria for approval. The smoother these approval workflows can be, the faster your ads get to market, so consider using content workflow automation tools that can streamline the process.

Build custom workflows with automation on Typeface

3. Audit trails

Make sure you’re logging every step, version, and decision in the AI process to maintain comprehensive records of how advertising content is created and approved. Detailed audit trails enable organizations to demonstrate compliance, identify patterns in AI decision-making, and quickly trace the source of any issues that arise after publication. Pick a marketing AI platform that saves audit trails for all content and offers clear visibility.
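To make the idea concrete, here is a minimal in-memory sketch of an audit trail. It assumes a hypothetical event shape (asset ID, version, actor, action, timestamp); a production system would persist these records in durable, access-controlled storage rather than a Python list.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)  # frozen: a recorded event cannot be edited afterwards
class AuditEvent:
    asset_id: str
    version: int
    actor: str
    action: str
    timestamp: str


class AuditTrail:
    """Append-only record of who did what to which version of an ad."""

    def __init__(self):
        self._events = []

    def record(self, asset_id: str, version: int, actor: str, action: str) -> AuditEvent:
        now = datetime.now(timezone.utc).isoformat()
        event = AuditEvent(asset_id, version, actor, action, now)
        self._events.append(event)
        return event

    def history(self, asset_id: str) -> list:
        """Return every event for one asset, in the order it was recorded."""
        return [e for e in self._events if e.asset_id == asset_id]


trail = AuditTrail()
trail.record("ad-101", 1, "ai-generator", "generated")
trail.record("ad-101", 2, "j.doe", "edited")
trail.record("ad-101", 2, "brand-lead", "approved")
for event in trail.history("ad-101"):
    print(event.version, event.actor, event.action)
```

With records like these, tracing an issue after publication comes down to reading the history of the affected asset.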

4. Role-based access controls

To ensure strict adherence to brand rules and compliance requirements, it's important to control who can create campaigns, set targeting parameters, or publish AI-generated advertising content. Use granular permission systems so that team members can access and modify AI systems only as their role allows.

Role-based access controls should reflect organizational hierarchies and expertise levels, preventing unauthorized changes to AI platform settings or generated content.
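A role-to-permission mapping is the simplest form this can take. The roles and actions below are hypothetical examples chosen to mirror the three activities named above (creating campaigns, setting targeting, publishing); they do not describe any specific platform's permission model.

```python
# Hypothetical roles and permissions, for illustration only.
ROLE_PERMISSIONS = {
    "copywriter": {"create_campaign"},
    "campaign_manager": {"create_campaign", "set_targeting"},
    "brand_approver": {"create_campaign", "set_targeting", "publish"},
}


def is_allowed(role: str, action: str) -> bool:
    """Unknown roles get no permissions (deny by default)."""
    return action in ROLE_PERMISSIONS.get(role, set())


def require(role: str, action: str) -> None:
    """Raise PermissionError unless the role may perform the action."""
    if not is_allowed(role, action):
        raise PermissionError(f"role '{role}' may not perform '{action}'")


require("brand_approver", "publish")        # passes silently
print(is_allowed("copywriter", "publish"))  # False
```

Denying by default means a newly added role can do nothing until someone explicitly grants it permissions.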

How can enterprises enforce brand safety and regulatory compliance in AI ad generation?

Kalra believes that AI governance is a shared responsibility.

We've learned that governance isn’t just about the features we build into the Typeface platform — it’s also about the invisible frameworks and operational rigor behind the scenes. While we provide tools to enforce brand, legal, and safety guidelines, a significant portion of governance involves foundational processes that must be thoughtfully designed and continuously maintained — many of which aren't directly visible to the end user.

Additionally, we’ve seen first-hand that much like other trust-based systems, both the platform provider and the user play critical roles in ensuring outputs are safe, compliant, and on-brand. Providers must deliver guardrails, transparency, and accountability, while users must apply these tools responsibly within their own workflows and content standards.

Here are some key steps enterprises can take toward better AI governance in advertising campaigns.

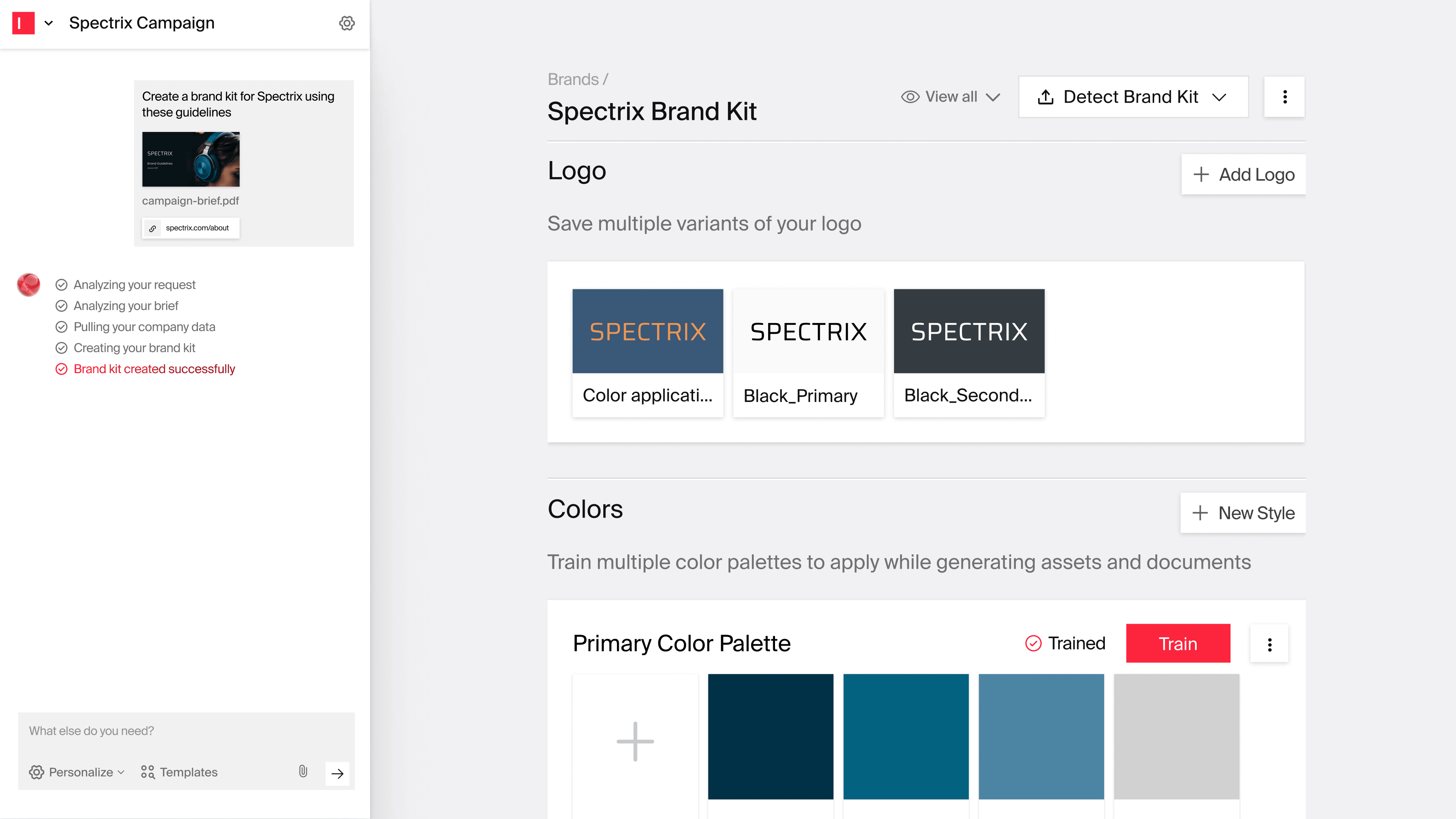

Training AI with advertising brand guidelines and assets

Embedding advertising-specific brand guidelines, campaign rules, and approved creative assets directly into AI systems gives them more context for creating effective ad content — from ad copy styles to approved color palettes to campaign messaging frameworks.

Shah points out that most organizations struggle to maintain a single source of truth.

Brand managers maintain guidelines across various sources — a PDF for overarching brand principles, a Figma file with visual identity standards, a Notion doc for tone of voice, and scattered emails or Slack messages with the latest updates.

Due to the disparate nature of these sources, it's easy for teams to reference outdated or inconsistent information, leading to off-brand executions and internal confusion. This fragmentation slows down creative workflows, increases the likelihood of errors, and undermines trust in the brand.

Typeface solves this problem by letting users save all brand guidelines, audiences, and assets in the Brand Hub. Our enterprise marketing AI platform integrates with your digital asset management (DAM) systems, content management systems (CMS), and knowledge bases, allowing you to easily import existing repositories.

Typeface organizes them for you into a rich content graph, with semantic search to help you find exactly what you need — documents, images or other brand assets — without the hassle of tagging every asset.

Brand Hub on Typeface

Not only does a unified Brand Hub make it easier for teams to find brand guidelines and assets, but it also ensures that all AI-generated content naturally aligns with brand standards from the outset.

How does this work? According to Shah, “Brands typically start by sharing their brand guidelines — these could be their audience details, brand guardrails, tone of voice, writing structure, and more. Once these guidelines and assets are shared, the process of training their Typeface brand kit starts. Our platform will ingest the documents and extract rules from them. These rules can be reviewed and also created by channel. Once that is done, we’re all set. The user can start generating content and those brand rules would be followed.”

Now, if you ask the Typeface agent to generate a LinkedIn sponsored content ad or a Google Ads campaign, the Ad Agent automatically collaborates with the Brand Agent to understand and apply your advertising brand guidelines and target audience parameters.

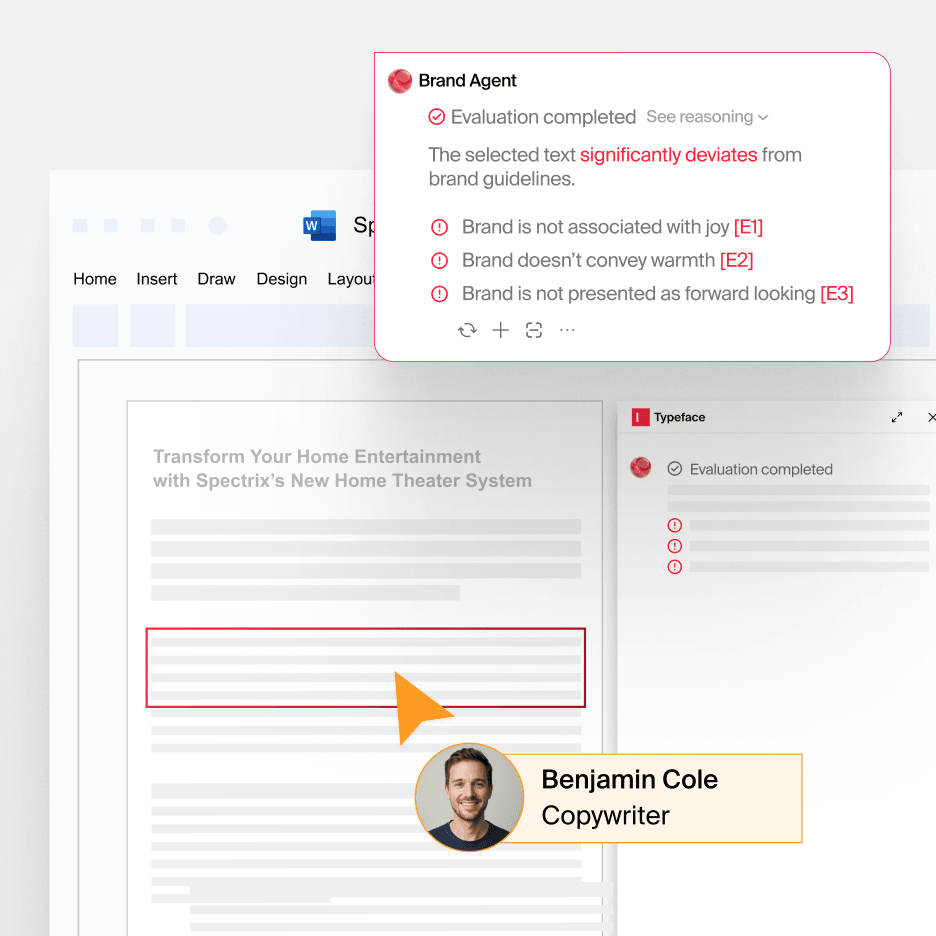

Brand alignment checks

Systematic brand alignment checks make ad governance much easier, especially when collaborating with external agencies or freelancers.

Kalra explains, “AI creates content at scale, and that requires careful curation before it’s published externally. This curation may be through humans in the loop or through automation, which in turn may use more AI to review the generated content. Having proper approval workflows is also very important for advertising governance.”

Brand Agent on Typeface, for instance, can analyze any content to determine if it adheres to your brand guidelines. It offers clear reasoning for content that is flagged and even offers improvement suggestions where needed.

Typeface Brand Agent

Explainable AI

Deploy explainable AI systems that provide transparency into decision-making processes, adding a further layer of assurance for brand safety. When AI systems can articulate why they made specific choices, human reviewers can more effectively evaluate the appropriateness of the output and identify potential issues.

Seamless and transparent workflows

Transparency and accountability throughout campaign creation are essential for advertising governance. Established workflows with clear roles are at the heart of successful AI implementation in paid media.

Workflow management features on your marketing AI platform are non-negotiable for scaling campaign production while maintaining quality and brand consistency.

Typeface allows you to build custom advertising workflows with automations like auto-assigning ad review tasks and sending notifications to stakeholders when ads move between approval stages.

Workflow automation on Typeface

These automations ensure smoother campaign handoffs while audit trails and versioning provide complete transparency in AI advertising content creation.

Responsible AI implementation

Responsible AI use in advertising requires implementing bias detection in audience targeting, ensuring ad personalization meets privacy requirements, and maintaining transparency in campaign decisions.

Typeface's enterprise-grade platform prioritizes trust and safety. It provides robust input filtering to block inappropriate advertising prompts, along with comprehensive output review systems that automatically flag content violating ethical guidelines or platform policies. The platform also implements C2PA standards to embed verifiable credentials in AI-generated ads, helping stakeholders identify AI-created content and prevent misinformation.

Enterprise data security

Protecting sensitive information and maintaining compliance with data protection regulations is imperative, irrespective of how you create content.

This requires strong encryption, access controls, data minimization practices, and regular security audits. With Typeface’s integrated security and privacy controls, upholding data safety standards is a lot easier. You have complete control over your business data, which is end-to-end encrypted, and full ownership of all creative inputs and generated output.

Enterprise-grade authentication and granular role-based access control add another layer of checks to maximize data security.

What’s the role of human oversight in AI-led advertising?

AI suggests, humans approve: Implement systems where AI generates suggestions and recommendations, but humans retain final approval authority for campaign assets.

Typeface allows users to incorporate human oversight and input from the very start by saving approved brand guidelines, ad templates, product images, and other assets, making governance more proactive rather than reactive.

Vetting AI tools, models and datasets: According to Kalra, companies need to have an AI mindset from the get-go.

Organizations need sound model and dataset onboarding processes when embedding AI in their products, and they need to review AI solutions closely when incorporating AI into their workflows. New models and datasets should always be vetted before adoption.

He also stresses the importance of choosing AI platforms carefully, saying, “Generic AI tools also produce output, but they lack enterprise guardrails and brand grounding. Therefore, you need to choose your AI tools wisely and evaluate not only their output generation but also their governance features.”

Team training and risk reduction: Train teams on AI tools and associated risks to reduce dependence on centralized authority and create a more resilient governance structure.

As Kalra emphasizes, “AI content creation is not a one-shot activity but requires thoughtful prompting, iterative improvements, and feedback loops.” Well-trained teams can make better decisions about when to escalate issues and how to collaborate effectively with AI systems.

Frequently asked questions about AI in digital advertising

How do I know if my AI advertising governance is sufficient?

Effective governance should provide clear decision-making criteria, maintain comprehensive audit trails, and regularly demonstrate compliance with relevant regulations. Regular audits and stakeholder feedback can help assess governance effectiveness.

What's the biggest risk of inadequate AI advertising governance?

The most significant risks include brand damage from inappropriate content, legal penalties for regulatory violations, and loss of consumer trust due to privacy or bias issues. These consequences can far exceed the cost of implementing proper governance.

How can small businesses implement AI advertising governance without extensive resources?

Small businesses can start with basic policies and approval workflows, leveraging existing compliance processes and gradually expanding governance capabilities as their AI usage grows. A common best practice is to require human sign-off on any AI-generated ad before it goes live.

In other words, use AI to automate content creation and bulk checks, but keep a marketer or editor in the loop to catch anything nuanced or unexpected.

How often should AI advertising governance frameworks be updated?

Governance frameworks should be reviewed at least quarterly and updated whenever new regulations emerge, significant AI capabilities are added, or audit findings identify gaps. Regular updates ensure continued effectiveness as the technology and regulatory landscape evolve.

How should marketing and IT collaborate on AI ad governance?

Effective AI governance in advertising is a cross-functional effort. Teams typically divide responsibilities as follows:

Marketing: Defines brand guidelines, content standards, and creative parameters for AI-generated ads.

IT/Data: Provides and secures the AI technology, ensures data privacy, and integrates AI tools into the ad tech stack.

Legal/Compliance: Monitors advertising laws and platform policies to guide AI usage.

Build AI content workflows confidently with Typeface

Looking for an enterprise AI marketing platform that cares about company and customer data privacy, responsible AI and content governance? Book a demo of Typeface today to discover true trust and safety in AI content creation.
